Spark Df, Flatten Df


Spark/Scala: Convert or flatten JSON with nested Struct/Array data into columns (Question), January 9, 2019

The following JSON contains some attributes at the root level, such as ProductNum and unitCount.

I am improving my previous answer and offering a solution to my own problem, which I stated in the comments of the accepted answer. The accepted solution creates an array of Column objects and uses it to select these columns. In Spark, if you have a nested DataFrame, you can select a child column like this: df.select("Parent.Child"). This returns a DataFrame with the values of the child column, and the resulting column is named Child.


But if attributes of different parent structures have identical names, you lose the information about the parent and may end up with identical column names that you can no longer access by name, because they are ambiguous. This was my problem.

I found a solution to my problem; maybe it can help someone else as well. I called flattenSchema separately:

    val flattenedSchema = flattenSchema(df.schema)

This returned an Array of Column objects. Instead of using this array directly in the select, which would return a DataFrame with columns named after the child at the last level, I mapped the original column names to themselves as strings. After selecting the Parent.Child column, it is then renamed to carry the full Parent.Child path instead of just Child (I also replaced the dots with underscores for my convenience, giving Parent_Child):

    val renamedCols = flattenedSchema.map(name => col(name.toString).as(name.toString.replace(".", "_")))

You can then use the select function as shown in the original answer:

    var newDf = df.select(renamedCols: _*)

@ukbaz Of course, it takes all nested child properties and flattens them schema-wise, which means that each of them is now a separate column of the DataFrame.


That was the goal of my solution. I am struggling to understand what exactly you need. If you have the columns ID, Person, Address but the schema is like 'ID', 'Person.Name', 'Person.Age', 'Address.City', 'Address.Street', 'Address.Country', then flattening turns the initial 3 columns into 6 columns. What result would you want based on my example? – Sep 28 '17 at 8:35
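The 3-to-6 column example can be tried out without a Spark session. The following is only a sketch of the idea: it models the schema as a plain nested dict rather than a Spark StructType, and the type strings and the helper names `flatten_paths` and `underscore_aliases` are made up for illustration.

```python
def flatten_paths(schema, prefix=None):
    """Return the dotted path of every leaf field in a nested dict schema."""
    paths = []
    for name, dtype in schema.items():
        full_name = f"{prefix}.{name}" if prefix else name
        if isinstance(dtype, dict):
            # A nested dict stands in for a struct: recurse with the parent path.
            paths.extend(flatten_paths(dtype, prefix=full_name))
        else:
            paths.append(full_name)
    return paths


def underscore_aliases(paths):
    """Pair each dotted path with an alias that keeps the parent name."""
    return [(path, path.replace(".", "_")) for path in paths]


# Hypothetical schema mirroring the ID / Person / Address example.
schema = {
    "ID": "long",
    "Person": {"Name": "string", "Age": "int"},
    "Address": {"City": "string", "Street": "string", "Country": "string"},
}

paths = flatten_paths(schema)
pairs = underscore_aliases(paths)
print(len(schema), "->", len(paths))  # 3 -> 6
print([alias for _, alias in pairs])
# ['ID', 'Person_Name', 'Person_Age', 'Address_City', 'Address_Street', 'Address_Country']
```

With PySpark, pairs like these could be passed to a select such as `df.select([F.col(p).alias(a) for p, a in pairs])`, assuming `F` is `pyspark.sql.functions` and `df` has a matching schema.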

Thanks for your reply, @V.Samma. Based on your example I get the following: 'ID', 'Person.Name', 'Person.Age', 'Address.City', 'Address.Street', 'Address.Country', 'ID1', 'Person.Name1', 'Person.Age1', 'Address.City1', 'Address.Street1', 'Address.Country1', 'ID2', 'Person.Name2', 'Person.Age2', 'Address.City2', 'Address.Street2', 'Address.Country2', and so on. What I would like is for those new columns to be rows in my DataFrame, so that the data in 'ID1' and 'ID2' would be under the ID column. Thanks – Sep 28 '17 at 9:21

Just wanted to share my solution for PySpark. It's more or less a translation of @David Griffin's solution, so it supports any level of nested objects.

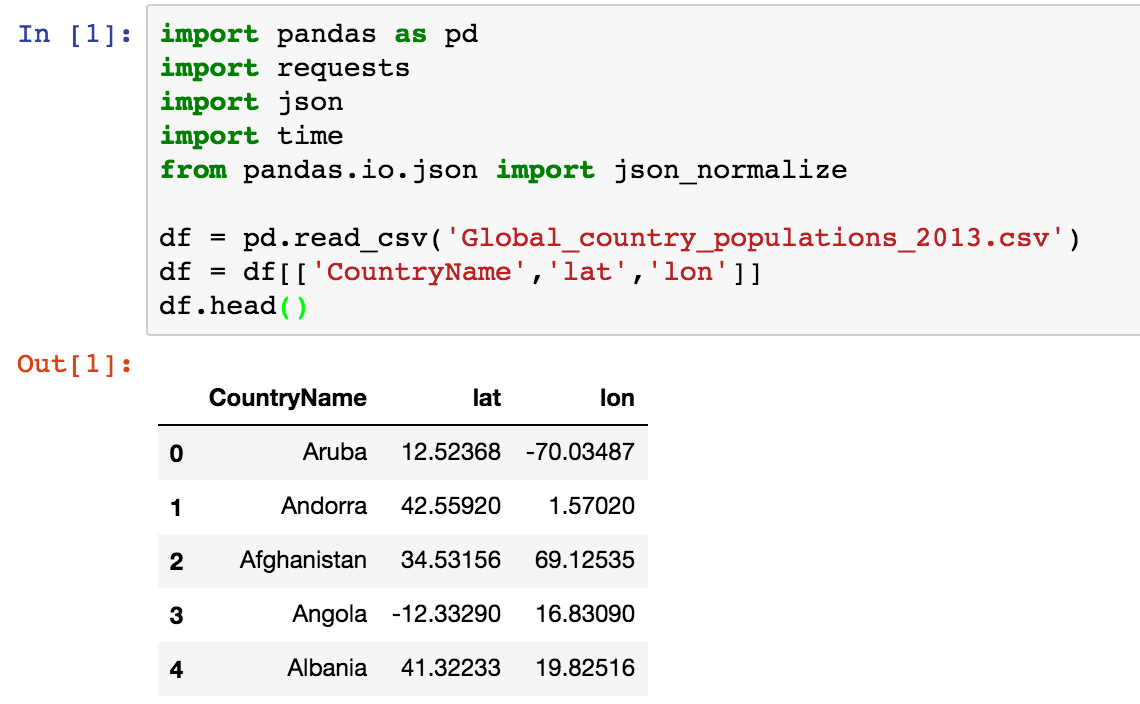

    from pyspark.sql.types import StructType, ArrayType

    def flatten(schema, prefix=None):
        fields = []
        for field in schema.fields:
            # Build the dotted path, e.g. 'Parent.Child'
            name = prefix + '.' + field.name if prefix else field.name
            dtype = field.dataType
            # For arrays, look at the element type
            if isinstance(dtype, ArrayType):
                dtype = dtype.elementType
            # Recurse into structs; anything else is a leaf column
            if isinstance(dtype, StructType):
                fields += flatten(dtype, prefix=name)
            else:
                fields.append(name)
        return fields

    df.select(flatten(df.schema)).show()

Here is a function that does what you want and that can deal with multiple nested columns containing columns with the same name, by adding a prefix:

    from pyspark.sql import functions as F

    def flattendf(nesteddf):
        # df.dtypes yields (name, type string) pairs; struct types start with 'struct'
        flatcols = [c[0] for c in nesteddf.dtypes if c[1][:6] != 'struct']
        nestedcols = [c[0] for c in nesteddf.dtypes if c[1][:6] == 'struct']
        flatdf = nesteddf.select(flatcols + [F.col(nc+'.'
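The dtypes-based split at the start of that function can be tried in isolation. This is a sketch under assumptions: the list below is a hypothetical stand-in for what `df.dtypes` might return (pairs of column name and type string), the helper name `split_columns` is invented here, and `startswith` is used in place of the `[:6]` slice, which tests the same prefix.

```python
def split_columns(dtypes):
    """Split (name, type_string) pairs into flat and struct column names."""
    flat_cols = [name for name, dtype in dtypes if not dtype.startswith("struct")]
    nested_cols = [name for name, dtype in dtypes if dtype.startswith("struct")]
    return flat_cols, nested_cols


# Hypothetical stand-in for df.dtypes on a DataFrame with two struct columns.
dtypes = [
    ("ID", "bigint"),
    ("Person", "struct<Name:string,Age:int>"),
    ("Address", "struct<City:string,Street:string,Country:string>"),
]

flat_cols, nested_cols = split_columns(dtypes)
print(flat_cols)    # ['ID']
print(nested_cols)  # ['Person', 'Address']
```

Only top-level structs are detected this way, so a struct nested inside another struct would need a further pass.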